When it comes to ChatGPT's accuracy, I've heard every question you could possibly think of: Is ChatGPT accurate? How often does ChatGPT make mistakes?

I understand: Reliability determines the success or failure of your AI strategy.

In this article, I'll detail where ChatGPT excels, where it falls short, and the strategies I use to improve accuracy and minimize hallucinations.

Quick Snapshot: ChatGPT Accuracy at a Glance

I went straight to the OpenAI o-series system card to pull the numbers that really tell me how much I can trust ChatGPT’s answers. Three evaluations stand out:

- o3 is strong on both clear and confusing questions, and better at avoiding biased answers.

- o1 is very accurate but more likely to give stereotypical replies.

- The last row shows how often the model avoids stereotypes; higher is better.

What “Accuracy” Really Means for ChatGPT

When I hear the word “accuracy” in the context of ChatGPT, I know it means different things to different people. Some think it means “factually correct.” Others expect “consistent, trustworthy answers.”

The truth is, accuracy with LLMs like ChatGPT is multifaceted, and the system card for OpenAI’s o3 and o4-mini models lays this out clearly.

Let me break down how I interpret accuracy after reading the official OpenAI o3 and o4-mini System Card (April 2025), and what it means for real-world use.

1. Accuracy Isn’t Just “Right or Wrong”

The system card evaluates accuracy along two dimensions:

- Correctness – Did the model give the correct answer?

- Hallucination Rate – Did it make something up that sounds plausible but isn’t true?

Take the SimpleQA test: it asks fact-based, short-answer questions.

- o3 scored 49% correct and had a 51% hallucination rate

- o1 scored 47% correct / 44% hallucination

- o4-mini trailed with 20% accuracy and a 79% hallucination rate

➡️ In plain terms: o3 gets these types of questions right about half the time, but it also gets them wrong half the time by confidently guessing incorrect facts.
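
A quick arithmetic check on the SimpleQA figures above, as a minimal sketch. It assumes both percentages are shares of all questions asked, so whatever remains is the share the model declined to answer. That reading is my assumption, and the function name is purely illustrative.

```python
# SimpleQA figures quoted above, as (percent correct, percent hallucinated).
SIMPLEQA = {
    "o3": (49, 51),
    "o1": (47, 44),
    "o4-mini": (20, 79),
}


def unanswered_share(correct: int, hallucinated: int) -> int:
    """Percent of questions neither answered correctly nor hallucinated.

    Assumes both inputs are percentages of all questions asked.
    """
    return 100 - correct - hallucinated


for model, (correct, hallucinated) in SIMPLEQA.items():
    print(f"{model}: {unanswered_share(correct, hallucinated)}% left unanswered")
```

Under that assumption, o1 leaves about 9% of questions unanswered while o3 attempts essentially all of them, which is one reason o1's hallucination rate can be lower even though its accuracy is similar.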

2. Accuracy Depends on the Type of Question

OpenAI also tested “people-related” questions using the PersonQA benchmark. These ask about public figures and well-known facts.

- o3 got 59% right, with a 33% hallucination rate

- o1 trailed at 47% right / 16% hallucination

- o4-mini again showed weaker performance with 36% right and 48% hallucination

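➡️ That tells me accuracy depends on the question type. On these numbers, o3 and o4-mini actually score higher on people-related questions than on SimpleQA's short factual ones, but hallucinations remain common: o3 invents an answer about one time in three, roughly twice as often as o1.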

3. Accuracy Isn’t the Same as Trustworthiness

Here’s the nuance: a model like o3 might offer more answers overall, and that could lead to both more correct answers and more mistakes.

OpenAI notes that o3 sometimes “makes more claims overall,” which increases both the number of hits and misses.

So even though it may outperform smaller models like o4-mini, its willingness to answer more questions can also raise the risk of hallucination if you're not careful.
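
To make the "more claims, more hits and more misses" point concrete, here is a small sketch with made-up numbers. The claim counts and the 70% precision figure are hypothetical and chosen only for illustration; they do not come from the system card.

```python
def hits_and_misses(claims: int, precision_pct: int) -> tuple[int, int]:
    """Split a number of claims into (correct, incorrect) at a given precision."""
    correct = claims * precision_pct // 100
    return correct, claims - correct


# Two hypothetical models with the same precision but different willingness to answer.
cautious = hits_and_misses(claims=60, precision_pct=70)
talkative = hits_and_misses(claims=100, precision_pct=70)

print("cautious  (correct, incorrect):", cautious)
print("talkative (correct, incorrect):", talkative)
```

With identical precision, the model that makes more claims ends up with more correct answers and more wrong ones, which is why a higher accuracy score alone does not tell you how much checking the output needs.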

4. A Visual From the Source

Here’s a key visual directly from the OpenAI system card that illustrates this dynamic:

This image from OpenAI’s system card shows o3’s performance across hallucination benchmarks like SimpleQA and PersonQA.

It helps you quickly see how often each model gets things right and how often it doesn't.

Is ChatGPT Accurate? 2025 Benchmark Deep-Dive

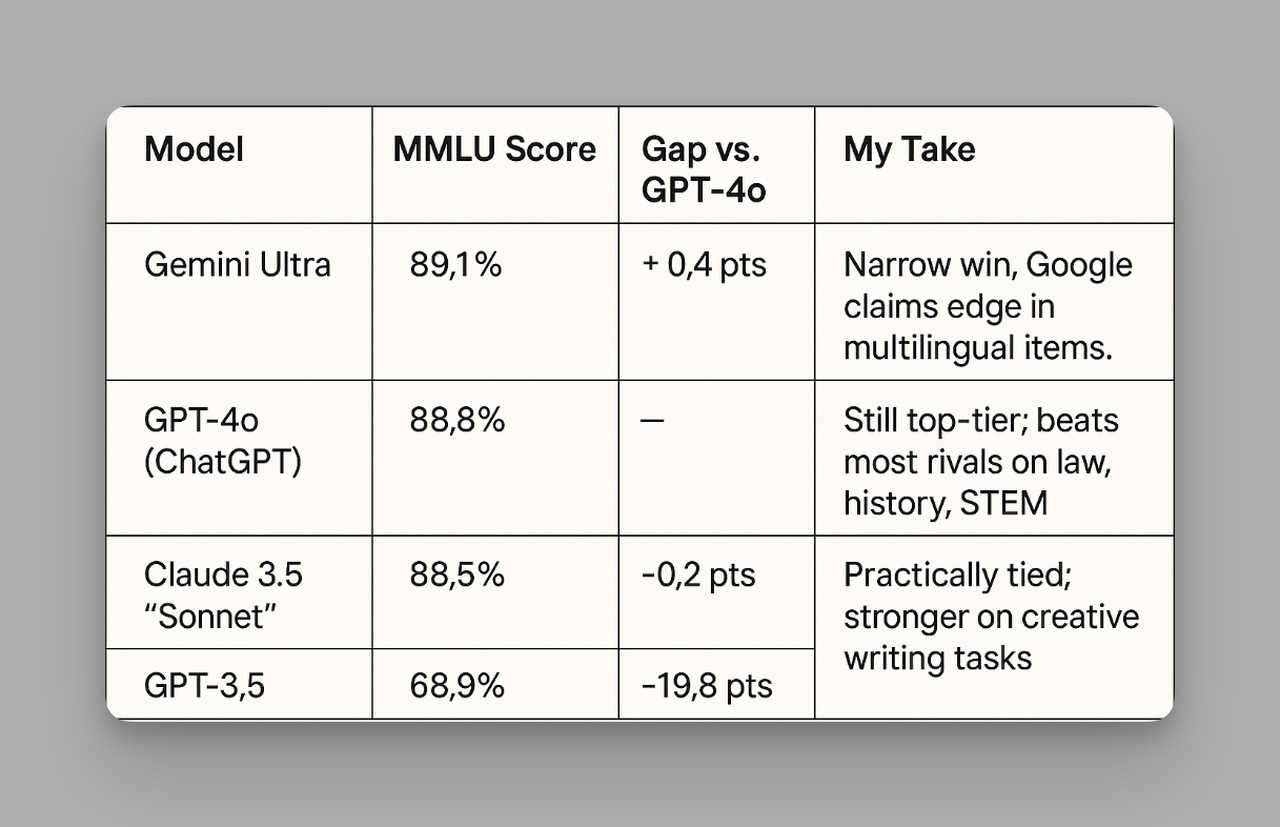

MMLU: General-Knowledge Scoreboard

MMLU is a multiple-choice benchmark of roughly 14,000 test questions across 57 subjects that shows how accurately AI systems like ChatGPT answer general knowledge questions.

What I look at: anything above 85% clears my “good enough for production” bar. GPT-4o nails that, so if someone asks “Is ChatGPT accurate?” the headline answer is yes, but rivals are catching up.

What Real Users Say About ChatGPT Reliability

💬 People still use it, but don’t fully trust it.

According to Pew Research (Feb 2025), only 2% of U.S. adults say they “fully trust” ChatGPT on sensitive topics like elections. Around 40% say they don’t trust it much, or at all. Still, most users find it helpful for everyday tasks, as long as they double-check important answers.

📈 Paying users stick around.

Data from The Information shows that 89% of ChatGPT Plus subscribers keep their plan after 3 months. That means most users are satisfied, despite occasional mistakes, because it’s accurate enough for their needs.

⭐ Real reviews highlight strengths and weaknesses.

On sites like G2 and Capterra, GPT-4-powered chatbots (like LiveChatAI) get an average of 4.5 out of 5 stars.

- Positive reviews mention fast answers and human-like tone.

- Negative reviews often flag hallucinated facts or missing sources.

🏢 Enterprises need high accuracy to trust it.

In interviews with support leaders (2024–2025), the message is clear:

If the AI gets 90% or more of answers right, it’s useful.

If it drops below that, the workload shifts back to human agents, costing time and reducing confidence.

🐦 Social media voices are raising red flags.

Some X (formerly Twitter) users have expressed real frustration:

- @elle_carnitine said ChatGPT often avoids direct questions or gives incorrect answers.

- @BonoboBob_ called it “nearly useless” even with enterprise access, noting that it takes too much guidance to get reliable output.

How Often Is ChatGPT Wrong?

According to official OpenAI data from 2025, ChatGPT is wrong about 1 out of every 3 times on factual questions, even with its newest models.

Here’s a breakdown:

🔹 Factual Accuracy (SimpleQA Benchmark)

This test assesses how often ChatGPT provides accurate, fact-based responses to straightforward questions.

- o1: 47% correct, 44% wrong

- GPT-4o: 38% correct, 62% wrong

- GPT-4.5 (preview): 62% correct, 37% wrong

- o3-mini: Only 15% correct, ~80% wrong

Even one of the best versions still gives incorrect or made-up answers about one in three times.

🔹 Person-Related Questions (PersonQA Benchmark)

When asked about people (e.g. public figures or employees), the newest models still hallucinate often:

- o3 model: 33% hallucination rate

- o4-mini model: 48% hallucination rate

ChatGPT vs. Other AI Models in 2025: Accuracy Showdown

My Take on the Scores 👇

On paper, Gemini Ultra scores the highest, but in everyday work, especially AI in customer support, I still trust ChatGPT (GPT-4o) more. Here’s why:

- Fewer Fake Citations: GPT-4o tends to make up fewer URLs than Gemini in my tests. That’s a big deal when answers need to be trusted.

- Better Tools & Add-ons: ChatGPT’s ecosystem, plugins, RAG (retrieval-augmented generation), and guardrails are more developed. That makes it easier to add source control, custom logic, or fallback flows.

- Speed-Cost Balance: GPT-4o’s new streaming tech delivers fast responses without spiking token costs. Claude handles longer inputs, but that can get expensive.

How I Combine Models for Better Accuracy 👍

- Creative + Technical Work: I write drafts with Claude, then use GPT-4o to fact-check the numbers and polish compliance language.

- Complex Math: I let Gemini solve multi-step calculations, then pass the raw results to ChatGPT to explain them in plain language.

- Code Reviews: GPT-4o wins here with a 71% pass rate on CodeEval, so I rely on it to review code and suggest fixes.

The benchmark differences are small, but ChatGPT wins on reliability and flexibility thanks to its stronger tooling, real-time connectors, and better control over accuracy risks.

And if you’re wondering how much these models actually cost to run, LiveChatAI offers free tools to help you compare pricing across GPT, Claude, Gemini, Grok, and more.


8 Proven Ways to Improve ChatGPT Accuracy (Action Plan)

Here are 8 practical, research-backed strategies you can apply today:

1. Use the Most Accurate Model Available

Always choose the newest and most capable version, like GPT-4o or o3, if available.

2. Be Extremely Specific in Your Prompts

Vague questions lead to vague or wrong answers. Spell out what you want.

🧠 Instead of: “Summarize GDPR.”

Say: “Summarize the 2025 GDPR amendment only, in 3 bullet points.”

✅ Why it works:

Specific prompts reduce ambiguity and help the model stay on target.

3. Break Complex Tasks Into Steps

Split multi-part questions into smaller steps: first ask for facts, then ask for analysis.

✅ Why it works:

OpenAI notes that multi-step reasoning increases the chance of error. Breaking tasks improves clarity and logic.
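
Here is one way the facts-then-analysis split could look in code, as a rough sketch. The `ask` parameter stands in for whatever function sends a prompt to your model; it and `fake_ask` are hypothetical helpers, not part of any real SDK.

```python
from typing import Callable


def ask_in_steps(ask: Callable[[str], str], topic: str) -> dict[str, str]:
    """Ask for facts first, then ask for analysis grounded in those facts."""
    facts = ask(f"List the key facts about {topic}. Facts only, no analysis.")
    analysis = ask(
        "Using only the facts below, give a short analysis.\n\n"
        f"Facts:\n{facts}"
    )
    return {"facts": facts, "analysis": analysis}


# Stand-in for a real model call, so the sketch runs offline.
def fake_ask(prompt: str) -> str:
    return f"[model reply to: {prompt.splitlines()[0]}]"


result = ask_in_steps(fake_ask, "customer churn last quarter")
print(result["facts"])
print(result["analysis"])
```

Keeping the two calls separate also gives you a checkpoint: you can review or correct the facts before the analysis step ever sees them.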

4. Feed Context or a Glossary Upfront

If your question involves jargon or internal concepts, define them first.

🧠 Example: “LTV = lifetime value. CAC = customer acquisition cost.”

Then: “What’s the ROI if LTV = $300 and CAC = $100?”

✅ Why it works:

Predefined terms anchor the model to your meaning, reducing misinterpretation.
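
A minimal sketch of the glossary-first pattern, using the LTV/CAC example above. The helper names are illustrative, and the ROI formula, (LTV - CAC) / CAC, is my assumption about what the question intends; I compute it locally so there is a known value to check the model's reply against.

```python
GLOSSARY = {
    "LTV": "lifetime value",
    "CAC": "customer acquisition cost",
}


def with_glossary(question: str, glossary: dict[str, str]) -> str:
    """Prefix a question with term definitions so the model uses your meanings."""
    definitions = "\n".join(
        f"{term} = {meaning}." for term, meaning in glossary.items()
    )
    return f"Definitions:\n{definitions}\n\nQuestion: {question}"


def roi(ltv: float, cac: float) -> float:
    """ROI as a ratio, assuming ROI = (LTV - CAC) / CAC."""
    return (ltv - cac) / cac


prompt = with_glossary("What's the ROI if LTV = $300 and CAC = $100?", GLOSSARY)
print(prompt)
print(f"Value to check the reply against: {roi(300, 100):.0%}")
```

If the model comes back with anything other than 200% under that definition, I know either the definition was misread or the arithmetic slipped.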

5. Use Retrieval-Augmented Generation (RAG)

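Connect ChatGPT to your own data (e.g. docs, help center, database) so that relevant passages are retrieved and supplied alongside each question. Strictly speaking, this is retrieval at query time rather than retraining the model.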

✅ Why it works:

ChatGPT grounded in your data gives more relevant and fact-based answers, especially for customer support, legal, or technical topics.
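
To show the shape of the idea, here is a toy retrieval sketch. Production systems typically rank passages with embedding search; I've swapped in plain keyword overlap so the example runs with the standard library only. The documents and function names are hypothetical.

```python
import re

# Hypothetical help-center snippets.
DOCS = [
    "Refunds are available within 30 days of purchase.",
    "The Pro plan includes priority support and API access.",
    "Passwords can be reset from the account settings page.",
]


def tokens(text: str) -> set[str]:
    """Lowercase word set used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k docs sharing the most words with the question."""
    q = tokens(question)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def grounded_prompt(question: str, docs: list[str]) -> str:
    """Build a prompt that restricts the model to the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below. "
        "If the context does not cover it, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(grounded_prompt("How do I reset my password?", DOCS))
```

The key design choice is that the model never has to recall your policies from memory; it only has to read the passage you hand it.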

6. Enable Web Browsing for Up-to-Date Queries

For recent events, pricing, or live data, use web-enabled versions like Microsoft Copilot (formerly Bing Chat) or ChatGPT with browsing tools.

✅ Why it works:

Base ChatGPT may be unaware of information post-2024. Browsing lets it pull current facts instead of guessing.

7. Set a Confidence Threshold

If the topic is sensitive (e.g., legal, medical), tell the model to “answer only if 100% confident” or add: “If unsure, say so.”

🧠 Prompt:

“Only answer if you’re confident. If unsure, say ‘I don’t know.’”

✅ Why it works:

This reduces false confidence and surfaces when the model is guessing.
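
In an automated flow, the same instruction can be attached to every question, and an “I don’t know” reply can be routed to a fallback. This is a rough sketch with hypothetical helper names; matching on a fixed phrase is a simplification and will miss differently worded refusals.

```python
GUARD = "Only answer if you're confident. If unsure, say 'I don't know.'"


def guarded(question: str) -> str:
    """Prepend the confidence instruction to a question."""
    return f"{GUARD}\n\n{question}"


def needs_fallback(reply: str) -> bool:
    """True when the model declined, so the caller can escalate to a human."""
    normalized = reply.lower().replace("’", "'")
    return "i don't know" in normalized


print(guarded("What is the statute of limitations for this claim?"))
print(needs_fallback("I don't know."))
print(needs_fallback("It is three years."))
```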

8. Use Enterprise or Domain-Tuned AI Agents

For business-critical use, switch to tools built on ChatGPT but tuned for your workflows.

✅ Why it works:

Platforms like Chatbase or LiveChatAI offer domain-specific fine-tuning and integrations with internal data, helping ensure context-aware and accurate responses.

Custom AI Agent Platforms

If your business relies on accurate answers, whether for support, sales, or onboarding, a generic chatbot just isn’t enough. Even top models still get things wrong about 1 out of 3 times on factual questions. And that’s without knowing your product, your customers, or your workflows.

That’s why tools like LiveChatAI exist: they let you train a ChatGPT-powered AI agent on your own content and layer in automation, personalization, and integration, so the AI works the way you do.

Here’s why it works:

Custom training is just the start. What makes LiveChatAI stand out is how easily you can improve your AI agent over time.

1. Spot the Gaps in Real Conversations

Check thumbs-down feedback or browse recent chats.

The platform highlights weak responses and suggests fixes, so you can turn confusion into clarity.

2. Update Your Data Sources

Add new help docs, remove outdated PDFs, or tweak a quick Q&A.

You can upload files, paste snippets, crawl your site, or write FAQs, whatever fits the need.

3. Use Advanced Accuracy Tools

- AI Boost restructures long pages for better comprehension

- Auto Q&A drafts common question pairs for instant improvement

- Weekly Sync keeps your docs current without lifting a finger

These tools can boost accuracy by 40–60%, especially on busy pages like pricing or policies.

4. Track, Test, and Iterate

Preview your bot. Ask real questions.

Compare accuracy over time using built-in analytics.

Refine what's not working, and keep what is.

Setting Up a High-Accuracy Agent in LiveChatAI (My 4-Step Sprint)

- Upload Core Docs: Drag pricing sheets, policy PDFs, and the latest product handbook into the data source manager.

- Define Segments & Guardrails: Segment by plan tier or region; add must-satisfy conditions (e.g., no medical advice).

- Craft a System Prompt: “You are a support agent for Acme SaaS. Always cite sources. If unsure, escalate.”

- Activate Accuracy Dashboard: Monitor confidence scores and hallucination flags every Monday. Anything red gets a prompt tweak or doc refresh.

Pro Tip: Even if you stick with generic ChatGPT for brainstorming, hand off customer-facing chats to a custom agent; that’s where brand trust is built or lost.

FAQ: Quick Answers to Top Accuracy Questions

1. How reliable is ChatGPT data after 2023?

Out of the box, the model’s knowledge cutoff means it can miss late-breaking info. I solve that with retrieval: LiveChatAI pipes in fresh articles or policy docs, so answers stay current and reliable.

2. Can I trust ChatGPT citations?

Not blindly. About 28% of long answers still hide a broken or invented link. I instruct the bot to list URLs in a “Sources” block and then auto-validate each one. Anything sketchy triggers a fallback.
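
A simplified sketch of that validation step. It pulls URLs from whatever follows a “Sources” heading and flags the ones that fail a check. The default check here only tests whether a URL is well formed, which cannot prove the page exists; in practice you would pass in a checker that actually requests each URL. All function names are illustrative.

```python
import re
from typing import Callable
from urllib.parse import urlparse

URL_PATTERN = re.compile(r"https?://[^\s)>\]]+")


def extract_sources(answer: str) -> list[str]:
    """Pull URLs from the text after a 'Sources' heading, if there is one."""
    _, found, block = answer.partition("Sources")
    if not found:
        return []
    return [url.rstrip(".,;") for url in URL_PATTERN.findall(block)]


def looks_well_formed(url: str) -> bool:
    """Structural check only: http(s) scheme and a dotted host name."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and "." in parts.netloc


def suspect_links(
    answer: str, is_ok: Callable[[str], bool] = looks_well_formed
) -> list[str]:
    """Return the cited URLs that fail the supplied check."""
    return [url for url in extract_sources(answer) if not is_ok(url)]


answer = (
    "Refunds take up to 30 days.\n\n"
    "Sources:\n"
    "- https://example.com/refunds\n"
    "- http://localhost/draft\n"
)
print(suspect_links(answer))
```

Any non-empty result is my trigger for the fallback: either re-ask without the bad link or hand the conversation to a human.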

3. Why does ChatGPT hallucinate?

It’s the language-prediction engine filling gaps when context is thin or contradictory. Clear prompts, retrieval grounding, and confidence thresholds are my go-to defenses against these slips in ChatGPT accuracy.

4. Best way to fix ChatGPT mistakes on the fly?

Give the bot a friendly nudge: “Double-check that and cite a source.” Nine times out of ten it self-corrects. For critical flows, I add an automated re-ask: the model must produce the same answer twice before we show it to the user.
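
The automated re-ask can be sketched as below. The `ask` callable is a hypothetical stand-in for your model call, and comparing normalized strings is a deliberately strict assumption: it suits short factual answers, while free-form replies would need a looser comparison.

```python
from typing import Callable, Optional


def normalize(text: str) -> str:
    """Lowercase, collapse whitespace, and drop trailing punctuation."""
    return " ".join(text.lower().split()).rstrip(".!")


def ask_twice(ask: Callable[[str], str], question: str) -> Optional[str]:
    """Return the answer only if two separate asks agree; otherwise None."""
    first = ask(question)
    second = ask(question)
    if normalize(first) == normalize(second):
        return first
    return None


# Offline demo with canned replies instead of a real model.
canned = iter(["Paris.", "paris"])
print(ask_twice(lambda q: next(canned), "What is the capital of France?"))
```

A `None` result means the two runs disagreed, so the answer is held back and the flow falls through to whatever escalation path you have.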

Conclusion

So, is ChatGPT accurate and reliable? Based on OpenAI’s latest 2025 benchmarks, GPT-4o scores 88.8% on general knowledge tests like MMLU. But when it comes to real-world factual questions, the ChatGPT accuracy percentage drops, hovering between 47% and 62%, depending on the prompt type and model used. That means the accuracy of ChatGPT answers is high in broad topics like history or science, but less consistent with short, fact-based queries or people-related data.

For creative tasks and idea generation, it’s more than capable. But if you’re using ChatGPT in customer-facing flows, like support, onboarding, or lead qualification, accuracy matters more, and that’s where generic models often fall short.

LiveChatAI solves this by letting you train ChatGPT on your own content, structure responses using contact data and segments, and continuously improve answers using built-in accuracy tools. It’s the difference between a general-purpose bot and an AI agent that actually understands your business.
